Driven, or at least accelerated, by the COVID-19 pandemic, the need for remote inspection in shipping has surged over the last few years.

Fewer hands on deck, increased work pressure and tight work schedules put seafarer safety at risk and may jeopardize proactive inspection and maintenance work in the maritime industries.

Maritime and offshore inspections

Current maritime and offshore inspection regimes are periodic. As such, inspections may take place more often than strictly necessary, erring on the side of safety for people, property, and the environment. Better to inspect too soon than too late.

But moving away from periodic inspection regimes towards more flexible, risk-based processes could bring huge advantages for both inspection crews and asset owners: increased safety, lower cost, improved efficiency, and better data coverage and quality. And robotics is the way to go, specifically indoor inspection drones of the flying kind.

Risk-based regimes do not necessarily mean less inspection, but a drone gives more options for when to inspect and how to scale and scope the job. A drone inspection may, for example, allow you to postpone a longer shipyard stay for periodic control.

Drone-based inspections enable safer, more flexible, yet consistent and standardized inspection processes. In many cases they also improve inspection data quality: a drone can easily record data from areas that would otherwise be difficult to reach, and it makes it easier to record consistent data from the same distances and under the same lighting conditions. The need to send humans into dark, hazardous, confined spaces will be reduced, as will the costs related to production stops, logistics and on-site access.

Automation enables further safety gains and cost reductions, plus a whole new dimension of benefits related to remote inspection, repeatability, and data consistency. This has long been a dream of the industry, and the COVID-19 pandemic has made it more pressing.

But it is no trivial task to make autonomous drones that do the job well. Several advanced disciplines are involved, and it takes joined forces to make it possible.

Scope and motivation of REDHUS

The REDHUS project is led by DNV, a leading class society and a global inspection, testing and certification company, in collaboration with shuttle tanker owner Altera, bulk ship operator Klaveness and robotics company ScoutDI. The Autonomous Robots Lab of the Norwegian University of Science and Technology (NTNU), led by Professor Kostas Alexis, is a key research partner, and the project is funded by the Research Council of Norway.

REDHUS stands for “Remote Drone-based Ship Hull Survey”, which pretty much defines the outer scope of the project. Under the surface, these are the project’s key technological developments:

- Automated generation and verification of a drone inspection flight plan, based on metrics and simulation.

- Automated execution of drone flight, plus new guidelines and data standards for drone inspections.

- Development of ML-based anomaly detection algorithms that can detect corrosion, cracks, coating damage and deformation with performance comparable to that of a human inspector.

- A new survey process to maximize the benefits of remote data collection and automated analysis.

In summary: The overarching goal is to demonstrate a remote ship hull or tank survey process based on automated drone inspection and automated analysis of the video data from the drone.

Making this process a future standard will enable increased safety and economic gains for ship owners. Consistently higher data quality and coverage from inspections improve the class process and make further long-term improvements possible. New market opportunities arise for drone service suppliers and, of course, for the technology providers. It’s a win-win situation with inherent benefits for all involved.

So how are we doing?

Fog-of-war

The main goal of ScoutDI in this project is “automated execution of drone flight”. We need to develop the required autonomy to do the path-following part: The drone must be able to ingest a data file that tells it which exact flight path to follow in a given volume, and when launched into the same volume, the drone must be able to follow that path and inspect predetermined Points of Interest (POIs) along the route. Thus, location and orientation must be as prescribed throughout the entire flight, with relatively high accuracy.
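The article does not describe the actual file format the Scout 137 ingests, so the following is only a hypothetical sketch of what such a flight plan and the path-following check might look like: a JSON file listing waypoints with position, heading and an optional POI label, plus a test of whether the drone's estimated pose is close enough to the planned one. The field names (`x`, `y`, `z`, `yaw_deg`, `poi`), the `tank_map` frame name and the 0.1 m / 5° tolerances are illustrative assumptions, not ScoutDI specifications.

```python
import json
import math
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical flight-plan format for illustration; NOT ScoutDI's actual file format.
EXAMPLE_PLAN = """
{
  "frame": "tank_map",
  "waypoints": [
    {"x": 0.0, "y": 0.0, "z": 1.5,  "yaw_deg": 0},
    {"x": 4.0, "y": 0.0, "z": 6.0,  "yaw_deg": 90,  "poi": "stiffener_A"},
    {"x": 4.0, "y": 3.0, "z": 12.0, "yaw_deg": 180, "poi": "ladder_B"}
  ]
}
"""


@dataclass
class Waypoint:
    x: float
    y: float
    z: float
    yaw_deg: float
    poi: Optional[str] = None  # label of the Point of Interest to inspect here, if any


def load_plan(text: str) -> List[Waypoint]:
    """Parse the (hypothetical) JSON plan into an ordered list of waypoints."""
    data = json.loads(text)
    return [Waypoint(**wp) for wp in data["waypoints"]]


def on_track(planned: Waypoint, x: float, y: float, z: float, yaw_deg: float,
             pos_tol: float = 0.1, yaw_tol_deg: float = 5.0) -> bool:
    """True if the estimated pose is within the (assumed) position and heading tolerances."""
    dist = math.dist((planned.x, planned.y, planned.z), (x, y, z))
    # Wrap the heading error into [-180, 180) so 180 deg vs -178 deg counts as 2 deg apart.
    dyaw = (yaw_deg - planned.yaw_deg + 180.0) % 360.0 - 180.0
    return dist <= pos_tol and abs(dyaw) <= yaw_tol_deg
```

For example, `[wp.poi for wp in load_plan(EXAMPLE_PLAN) if wp.poi]` yields the POIs in flight order. The same structure could just as well hold a path recorded during a manual first flight and replayed later.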

The Scout 137 Drone does a lot of the job already: Using an ingenious combination of 3D LiDAR technology, two vertical lasers plus an IMU and a powerful edge-computing processor, the Scout 137 makes a local 3D map of the surroundings and knows its own position in it at any time. This powers the drone’s anti-collision system and paves the way for further autonomy functions, while also providing location-tagging of all inspection data and live situational awareness to the inspection crew. It works like an indoor GPS with centimeter accuracy.

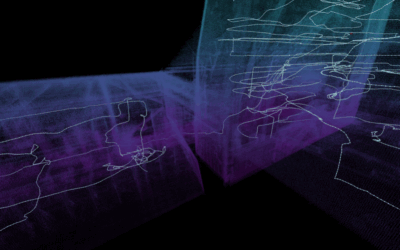

But even with a LiDAR sensor, the drone cannot know what is around the corner until it has been around the corner. At any instant, the LiDAR image of the volume you’re flying in is based on the present moment plus some amount of memory. Any unseen feature is temporarily a black hole of nothingness. If you have played real-time strategy games like Age of Empires, you may know this concept as the “fog of war”.

The real world is full of volumes where a LiDAR cannot see everything from a single position. To be able to follow a path, the drone must therefore go around those corners and collect data along at least the entire path. So we need to teach the drone some strategies to handle this chicken-and-egg situation.

There are two main strategies:

- Give the drone a pre-made CAD (Computer Aided Design) reference model of the volume

- Allow the drone a “mapping flight” through the volume and use the resulting data as a map.

The idea of a mapping flight is to gather LiDAR data from enough positions inside a volume that it can be combined into a 3D point-cloud map of the entire volume. Building a map of an environment the robot has no prior knowledge of, while navigating it safely at the same time, relies on Simultaneous Localization and Mapping (SLAM). It is the key to autonomy for any aspiring can-do inspection robot.
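To make the mapping-flight idea concrete, here is a minimal sketch of only the mapping half of the problem: assuming each LiDAR scan already comes with an estimated drone pose, the scans are transformed into one common map frame and merged, keeping one point per voxel so the map does not grow without bound. The pose format `(x, y, z, yaw)`, the gravity-aligned simplification (no roll or pitch) and the 5 cm voxel size are assumptions; a real SLAM system estimates those poses at the same time as it builds the map.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Pose = Tuple[float, float, float, float]  # assumed (x, y, z, yaw in radians)


def transform_scan(points: Sequence[Point], pose: Pose) -> List[Point]:
    """Rotate sensor-frame points by yaw and translate them into the map frame.

    Assumes roll and pitch are already compensated (gravity-aligned), a simplification.
    """
    tx, ty, tz, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]


def build_map(scans_with_poses: Sequence[Tuple[Sequence[Point], Pose]],
              voxel: float = 0.05) -> List[Point]:
    """Merge many posed scans into one point-cloud map, one point per voxel."""
    cells: Dict[Tuple[int, int, int], Point] = {}
    for points, pose in scans_with_poses:
        for p in transform_scan(points, pose):
            key = tuple(math.floor(v / voxel) for v in p)
            cells.setdefault(key, p)  # keep the first point seen in each voxel
    return list(cells.values())
```

Voxel downsampling is just one common way to keep a merged map compact; it trades some detail for bounded memory, which matters for an in-flight computer.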

The CAD model represents a “perfect” reference, and pre-programming a path on it lets users refine that path to be as safe and efficient as possible. This involves some desktop work, but only once. It also makes a lot of sense and is within the scope of the REDHUS project.

But relying on the mapping flight alone is also very appealing, since it opens up simplified path planning in the future. Imagine flying through a volume just once, marking points A, B, C and so on as POIs, and leaving the drone to do the rest in all future inspections. You could simply “replay” the path from the first flight and revisit the same POIs marked during that flight. You would get consistent visual data every time, suitable for trend spotting and automated differential analysis down the line.

Online SLAM

As mentioned, SLAM is the key to climbing the autonomy ladder, whether the robot crawls, runs, rolls, or flies. SLAM is not a specific, copyrighted piece of technology. It is a collective name for the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of your location within it.

Many SLAM implementations require a very large dataset to be available at compute-time and are very computationally intensive. This is where SLAM forks into two branches:

- Online SLAM runs while the drone is in flight and prioritizes object detection and avoidance. It needs to be fast.

- Offline SLAM happens after the drone has landed, prioritizes map quality and is not concerned about robot control. It can take time to run complex algorithms to align to specific geometric features.

For these reasons, SLAM for the sake of full, detailed mapping is often done post-inspection and offered as part of desktop or cloud software.

But the better your online SLAM algorithm becomes, the better the drone’s potential to navigate and operate in spaces it has not visited before, even without a CAD model for support. Relying on its SLAM implementation, the drone can, for example, calculate a good path back to the entry point by itself, or find the best path between any two points inside a volume. It may continue to refine and extend paths as it proceeds through the volume until it has “seen” all of it. A pilot may supervise the inspection simply by pointing to locations in a 3D point cloud.
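As an illustration of finding a path between two points inside a mapped volume, the sketch below runs A* search over a hypothetical 3D voxel grid. Cells the drone has not yet seen are treated as blocked, a conservative way of handling the fog of war described earlier. The grid representation, the 6-connected unit-step moves and the cell labels are assumptions for illustration, not the planner running on the Scout 137.

```python
import heapq
import itertools
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int, int]
FREE, OCCUPIED, UNKNOWN = 0, 1, 2  # hypothetical cell labels


def astar(grid: Dict[Cell, int], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 6-connected path from start to goal through FREE cells only.

    Cells missing from `grid` count as UNKNOWN and are never entered, so the
    planner never routes the drone into parts of the volume it has not mapped.
    """
    def h(c: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost 6-connected moves.
        return sum(abs(a - b) for a, b in zip(c, goal))

    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    tie = itertools.count()  # tie-breaker so the heap never compares cells directly
    open_heap = [(h(start), next(tie), start)]
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g = {start: 0}
    while open_heap:
        _, _, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            node: Optional[Cell] = cur
            while node is not None:  # walk back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        for d in steps:
            nb = (cur[0] + d[0], cur[1] + d[1], cur[2] + d[2])
            if grid.get(nb, UNKNOWN) != FREE:
                continue
            ng = g[cur] + 1
            if ng < g.get(nb, float("inf")):
                g[nb] = ng
                came_from[nb] = cur
                heapq.heappush(open_heap, (ng + h(nb), next(tie), nb))
    return None  # goal unreachable through known free space
```

Treating unknown space as blocked is the safe choice for following a pre-planned path; an exploring drone would instead use the frontier between free and unknown cells to decide where to look next.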

Walk the talk

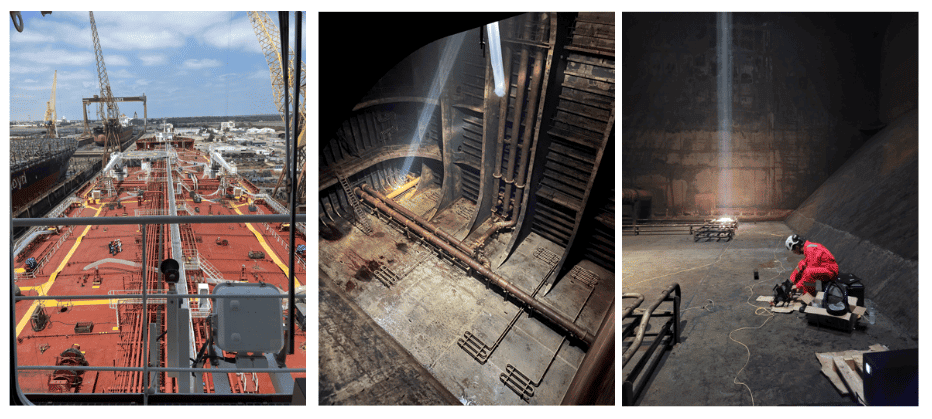

Any SLAM implementation with real-life operational ambitions must be thoroughly tested to verify that it is ready and able to face the real world. In this case, what better place to do it than inside an actual crude oil tanker?

Beothuk Spirit

The “Beothuk Spirit” typically operates offshore on the East Coast of Canada, under the Canadian flag. It belongs to a class of vessels that matches the REDHUS project scope well and is owned by our very supportive project partner Altera. While the vessel was docked in Portugal, the crew welcomed us onboard for some important field tests.

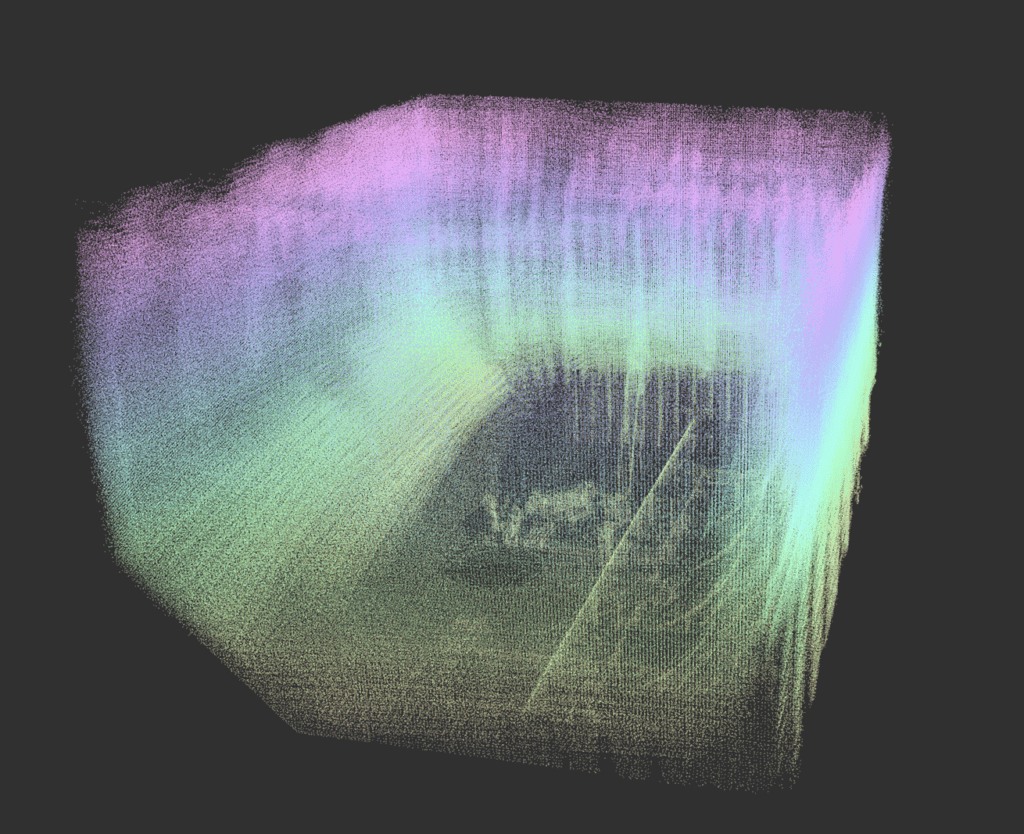

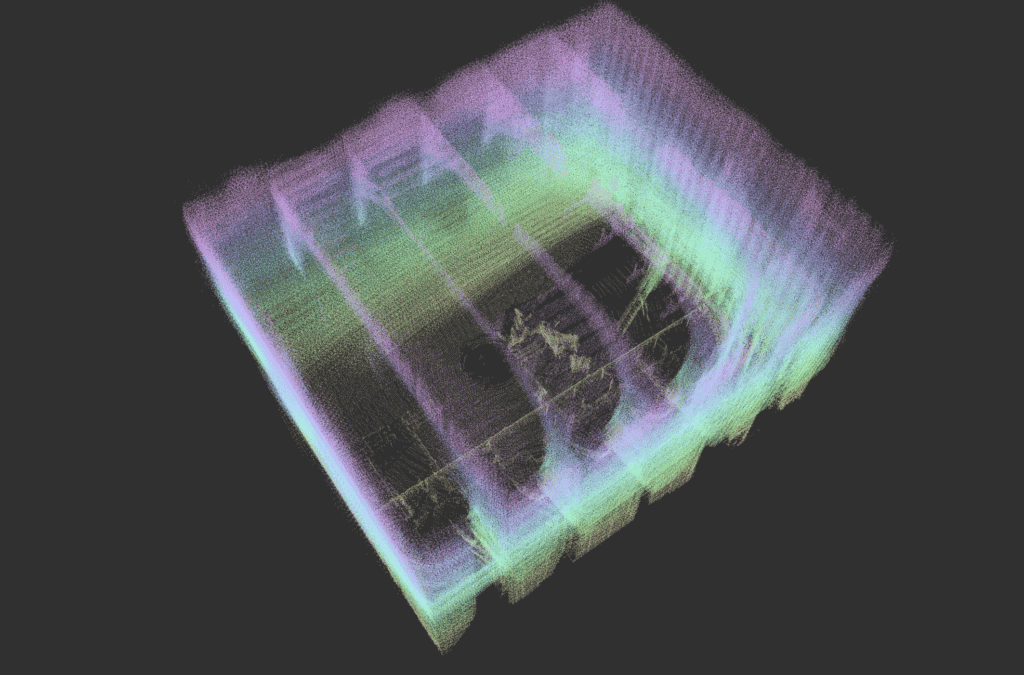

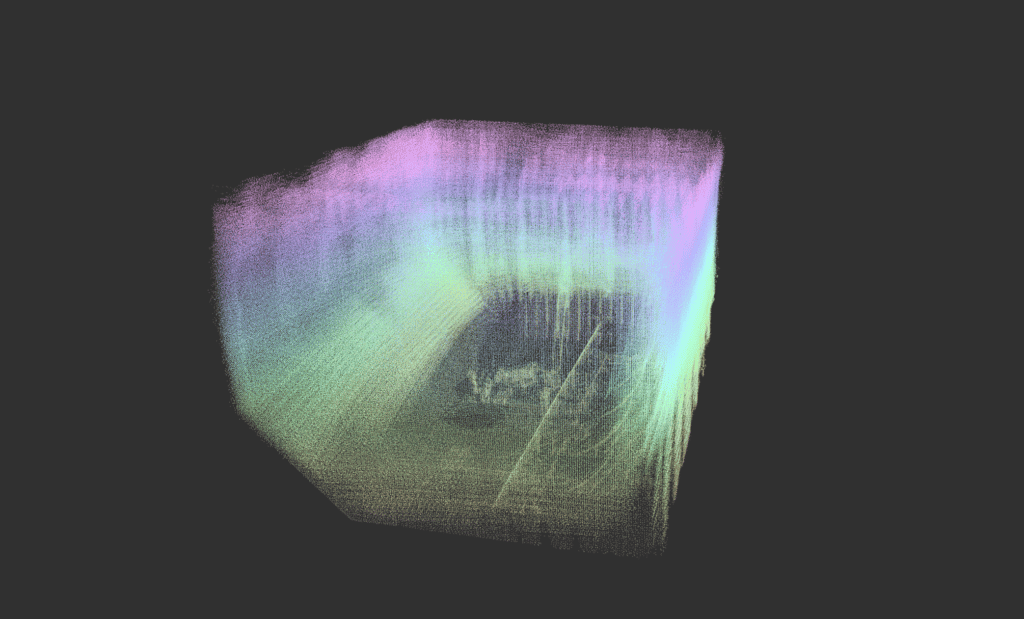

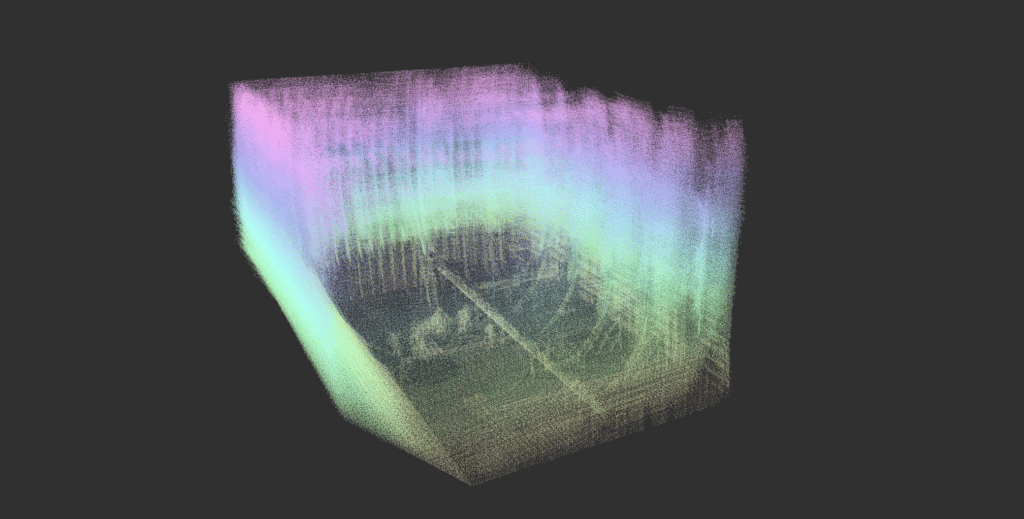

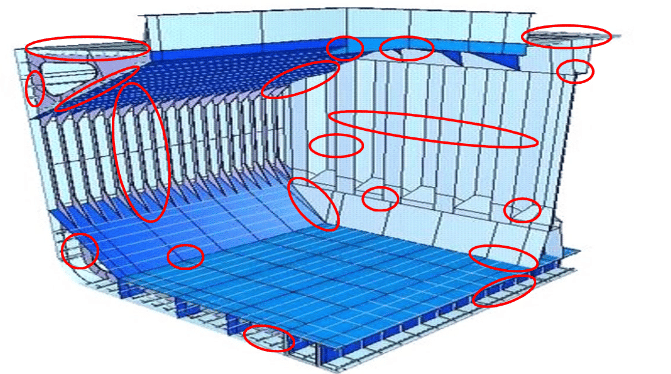

The cargo tanks of vessels like this are often very tall. As the photos above show, two vertical cross-sections at mid-height will look very similar to a LiDAR sensor. All surfaces and edges, such as plates, stiffeners, ladders and pipes, must therefore be exploited as contributing features for the drone’s processing engine.

Make a map that fits the map

Our main mission on the “Beothuk Spirit” was to gather relevant data to accelerate the work on mapping an entire cargo tank volume. Additionally, we wanted to evaluate data quality and time consumption, and to investigate the best way to inspect some specific cargo tank structures.

In the REDHUS project, the POIs will be defined via a computer interface using the CAD model as reference. Once those points are translated to the drone’s “online” SLAM point cloud, they must match the real positions closely enough, and there must be a free line of sight between the drone camera and each POI.
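The free line-of-sight requirement can be checked against the drone's own map. The sketch below assumes the map is stored as a set of occupied voxel indices (a hypothetical representation) and marches along the ray from the camera to the POI in half-voxel steps. The POI's own voxel is occupied by definition, since the POI lies on a surface, so reaching it counts as success. Fixed-step ray marching can miss a voxel whose corner the ray only clips; an exact voxel traversal such as the Amanatides and Woo algorithm avoids that.

```python
import math
from typing import Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxel_of(p: Point, voxel: float) -> Voxel:
    """Integer voxel index containing point p."""
    return tuple(math.floor(c / voxel) for c in p)


def has_line_of_sight(camera: Point, poi: Point, occupied: Set[Voxel],
                      voxel: float = 0.05) -> bool:
    """True if no occupied voxel blocks the straight line from camera to POI.

    Approximation: samples the segment every half voxel instead of doing an
    exact voxel traversal.
    """
    target = voxel_of(poi, voxel)
    n = max(1, math.ceil(math.dist(camera, poi) / (0.5 * voxel)))
    for i in range(n + 1):
        t = i / n
        v = voxel_of(tuple(a + t * (b - a) for a, b in zip(camera, poi)), voxel)
        if v == target:
            return True  # reached the POI's own surface voxel unobstructed
        if v in occupied:
            return False  # something solid sits between camera and POI
    return True
```

A planner could run this check for each candidate camera position near a POI and discard positions where a stiffener or pipe is in the way.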

So, specifically we needed to:

- Verify that the LiDAR point cloud from the Scout 137 Drone aligns with the CAD model, so that the coordinates of POIs on the CAD model correspond to coordinates in the drone’s point cloud.

- Prove that the Scout 137 Drone can build a full LiDAR point cloud in which all features match the real-life inspection target well enough.

- In summary, prove that when CAD model POIs are translated to the drone’s LiDAR point cloud, the drone can locate the exact real-world POIs, go to them and point the camera at them.
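Translating CAD POIs into the drone's point cloud boils down to estimating the transform between the two frames and applying it to every POI. The article does not say how ScoutDI performs this alignment, so the following is only a sketch under two stated assumptions: a few matched point pairs are known (for example, hand-picked corners of stiffeners or hatches), and both frames are gravity-aligned, as an IMU-backed map would typically be, so only yaw and a 3D translation remain unknown. That 4-degree-of-freedom least-squares problem has the closed-form solution below; in practice one would likely refine such an initial estimate with ICP against points sampled from the CAD surfaces.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def fit_yaw_translation(cad_pts: Sequence[Point],
                        map_pts: Sequence[Point]) -> Tuple[float, Point]:
    """Least-squares fit of map ~ Rz(yaw) * cad + t from matched point pairs.

    Assumes gravity-aligned frames (z up in both), so roll and pitch are zero.
    """
    n = len(cad_pts)
    ca = [sum(p[i] for p in cad_pts) / n for i in range(3)]  # CAD centroid
    cb = [sum(p[i] for p in map_pts) / n for i in range(3)]  # map centroid
    c = s = 0.0
    for a, b in zip(cad_pts, map_pts):
        ax, ay = a[0] - ca[0], a[1] - ca[1]
        bx, by = b[0] - cb[0], b[1] - cb[1]
        c += ax * bx + ay * by  # sum of dot products of centered xy pairs
        s += ax * by - ay * bx  # sum of cross products of centered xy pairs
    yaw = math.atan2(s, c)  # angle maximizing alignment of the centered sets
    cy, sy = math.cos(yaw), math.sin(yaw)
    t = (cb[0] - (cy * ca[0] - sy * ca[1]),
         cb[1] - (sy * ca[0] + cy * ca[1]),
         cb[2] - ca[2])
    return yaw, t


def cad_to_map(p: Point, yaw: float, t: Point) -> Point:
    """Express a CAD-frame point (e.g. a POI) in the drone's map frame."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    return (cy * p[0] - sy * p[1] + t[0], sy * p[0] + cy * p[1] + t[1], p[2] + t[2])
```

Usage: fit once from the matched pairs, then call `cad_to_map` for every POI defined on the CAD model. The residual distances between `cad_to_map(cad_i)` and `map_i` give a quick measure of how well the drone's map fits the CAD model.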

Thankfully, our SLAM implementation proved its worth with convincing results inside the “Beothuk Spirit”, and we now know that the drone’s built-in localization is able to use a CAD-based map with high real-world accuracy. In the context of the REDHUS project, this means that the next step for ScoutDI is to make the drone actually follow a pre-drawn path between the CAD-based POIs.

You can see the principle in the video below: in this screen recording from the Scout Simulator, a virtual Scout 137 Drone follows a path generated from a CAD model. In the simulation, though, the drone also uses the CAD model as its reference map instead of its own LiDAR sensor output. So we’re basically testing that the path is obstacle-free and visits all the POIs in a sensible way.

Now we just need to move a whole lot more electrons and do this in real life, in a real tank, with a real Scout 137 Drone System.

The ceiling is the limit

So, the secret sauce of good autonomy is not buried in public-domain algorithms or off-the-shelf software. There is no magic button or silver bullet. You need to acquire domain knowledge and continuously refine the technology, taking the intended application into account. It’s a time-consuming process that takes lots of people and lots of work, but as REDHUS demonstrates, it pays off!

ScoutDI is connected to top experts in industry and academia, such as Professor Kostas Alexis and his team at the NTNU Autonomous Robots Lab (located near ScoutDI headquarters), who were part of the winning team of the DARPA Subterranean Challenge. This team has contributed significant improvements to the exploration, localization and mapping algorithms.

DNV has made measurable progress in automatic defect detection. Their corrosion coating condition algorithm, which you can see in the REDHUS video at the beginning of this article, is now at a level where its performance is considered on par with that of human surveyors.

We are also connected to heavy industry players in class, maritime industries and the inspection business and have robotics, autonomy and computer vision competence in-house. We are propelling technology forward by combining forces through collaborative research projects, like REDHUS.

Very exciting things come out of that, and a path-following, self-piloting inspection drone is a pretty cool example.

What’s next?